As businesses grow, so does their internal knowledge base. In the age of remote work, when distributed teams collaborate across locations, employees' ability to find the information they need quickly and independently is especially crucial.

Challenges faced by knowledge workers and managers

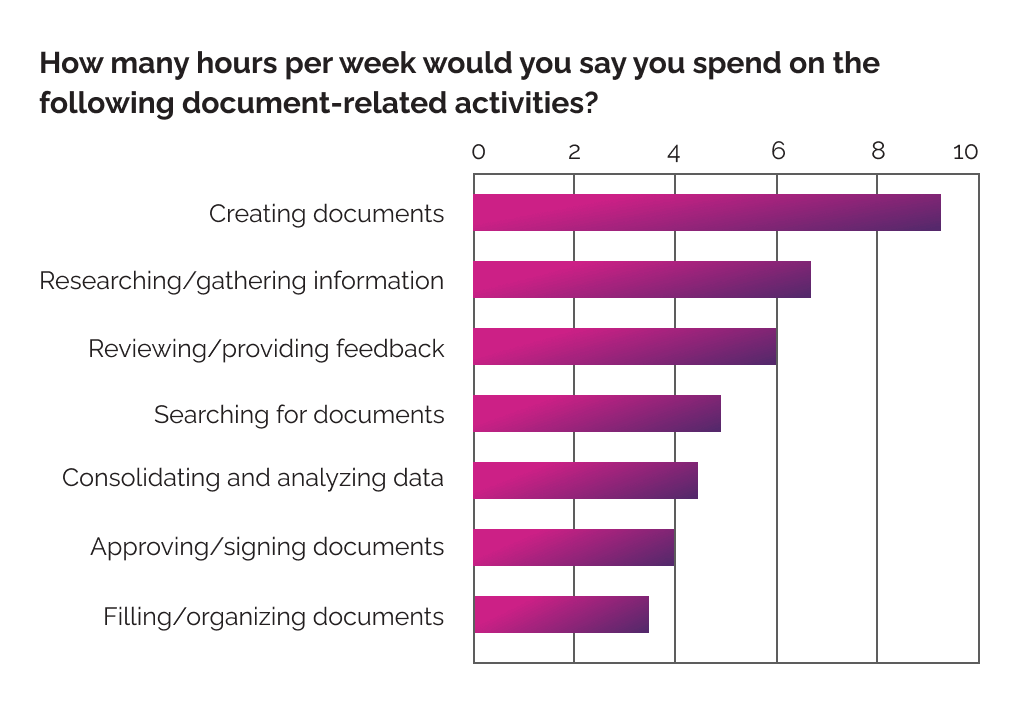

Statistics show that employees spend, on average, 30% of their workday looking for information. Whether they are handling questions about company policy or customer inquiries, they have to navigate an increasingly complex maze of documents and sources to find answers.

The knowledge workers of today face an unprecedented level of cognitive load. They need to search, gather, and process large volumes of information daily. And they need to do it fast. In fact, preventing cognitive overload has become such a concern that companies are prioritizing it as a core employee experience metric.

However, knowledge workers are not the only ones overwhelmed by information scattered across Google, Notion, Confluence, and assorted files and folders. Knowledge managers are struggling too. They have to answer the same routine questions over and over, point team members to information that is already documented, or stay available outside their working hours to provide remote support.

Source: Adapted from IDC Information Worker Survey

How is AI transforming knowledge management?

Generative AI has taken the world by storm, and its benefits for efficiency and productivity are widely recognized. However, its benefits for individual knowledge workers and managers may be less clear.

Knowledge workers depend on the free flow of information to do their jobs well and make decisions. When they don’t have proper access to information, they may make poorly informed decisions that affect the business as a whole.

By automating tasks like organizing content, document retrieval and analysis, text extraction, and search, AI not only supports knowledge work but transforms it entirely.

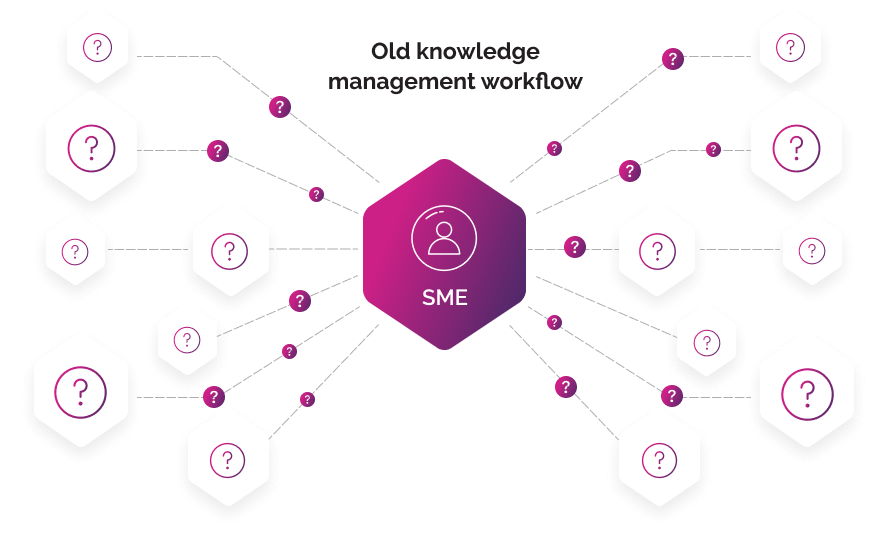

The old knowledge management workflow

Imagine you are a customer support specialist at a rapidly growing e-commerce company. Each time a customer calls, you have to put them on hold. Sometimes you have to check three or four separate systems to find the answer while the customer waits.

Or you’ve just been hired as a project manager in a remote team. You're eager to contribute to your first major project, but soon discover you lack vital onboarding information. Now you have to go through lengthy email threads, ping multiple team members, and wait for their response, which could take hours due to time zone differences.

Making company knowledge easy to find and access has long been a challenge for businesses, leading to problems such as:

- Inconsistent delivery. Conflicting or inconsistent information can create confusion and lead to more work-related errors. It also makes employees less likely to trust and use existing knowledge bases, potentially turning them into a wasted investment.

- Knowledge silos. Knowledge is often lost between departments or when an experienced team member leaves the company. When teams fail to share critical information, it’s often the customer who suffers most.

- Limited scalability. As organizations grow, so does the demand for employee support. Knowledge managers have to answer an ever-increasing number of questions, which takes time away from more important parts of their role.

- Employee frustration and knowledge manager burnout. Unable to find what they are looking for, employees fall back on asking questions in chat and grow frustrated in the process. On the other end, Subject Matter Experts (SMEs) may be unable to keep up with requests, and frequent interruptions lead to burnout and lower performance.

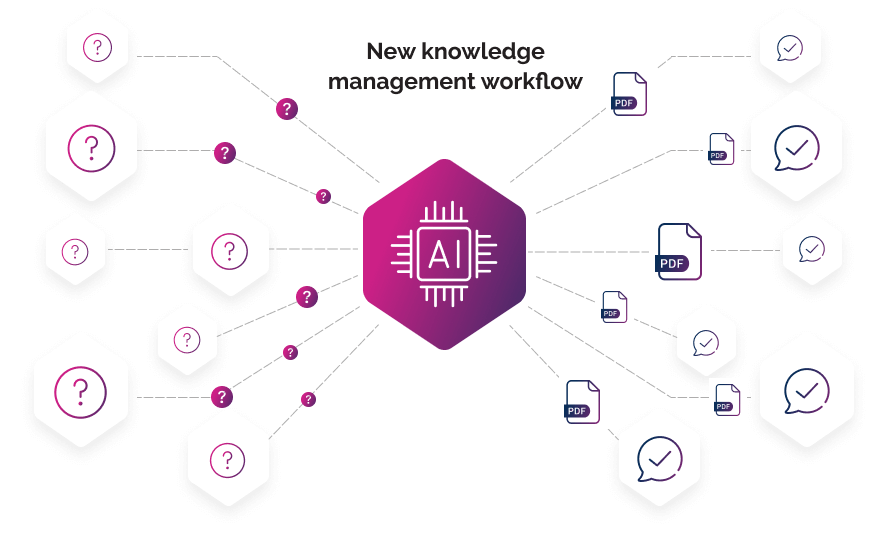

The AI-powered knowledge management workflow

Recent breakthroughs in AI have made ineffective knowledge management easier to fix. Organizations are rediscovering the value of their existing knowledge bases by connecting them to generative AI through large language models (LLMs) and chatbots.

In this new workflow, organizations can capture internal knowledge and use existing data (wikis, intranet, handbooks, customer profiles) to curate content that will be fed to AI. Employees can then type in domain-specific questions and get instant answers directly from their internal docs with the help of an AI chatbot.

What is an AI knowledge base?

Unlike a traditional knowledge base, which is a collection of static articles and documents, an AI knowledge base retrieves information dynamically. It’s a centralized repository that has been structured and optimized both for human use and for machine understanding. It can surface the most relevant information on demand, taking into account the user’s context and the relationships between data.

There are several key advantages to this approach:

- You can integrate information that wasn’t available during the AI’s initial training and personalize it with your company’s knowledge.

- With techniques like Retrieval Augmented Generation (RAG), you don’t have to retrain the model to use your custom data, which makes the approach less costly.

- Unstructured content is notoriously hard to manage, although it can provide a lot of insight into how customers think and feel. Using machine learning algorithms, AI knowledge bases can organize and analyze unstructured interactions, such as support chat history or emails, to assist contact centers and support agents.

- AI knowledge bases help companies keep content up to date, onboard new team members without extensive training, and significantly reduce knowledge silos and the time needed to retrieve information.

What are the main components of an AI knowledge base?

To understand AI knowledge bases in more depth, let’s look at a few core technologies and components.

Data sources: The foundation of any knowledge base is its data repository, which can cover a wide array of documents, including FAQs, product details, customer interaction logs, or guides. This is the material the AI retrieves information from and builds its responses on.
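Before a repository like this can be searched by an AI assistant, large documents are usually split into smaller passages that are indexed individually. The Python sketch below shows one simple way to do that with overlapping word windows; the `Chunk` type, the `chunk_document` function, and the default window sizes are illustrative assumptions, not part of any specific product.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # where the passage came from, e.g. a handbook file path or wiki URL
    text: str


def chunk_document(source: str, text: str, max_words: int = 100, overlap: int = 20) -> list[Chunk]:
    """Split a document into overlapping word windows so each passage can be indexed separately."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    chunks: list[Chunk] = []
    step = max_words - overlap  # how far each window advances
    for start in range(0, len(words), step):
        window = words[start:start + max_words]
        chunks.append(Chunk(source, " ".join(window)))
        if start + max_words >= len(words):
            break  # this window already reached the end of the document
    return chunks
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks. Real pipelines often split on headings or sentences instead of fixed word counts.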

Natural Language Processing (NLP): When a user submits a query to the knowledge base, NLP enables the AI not only to understand the question but also to translate it into a format the system can work with.

However, NLP goes beyond human-AI interaction, serving a variety of information-organizing purposes. Through semantic analysis, AI can tag, organize, and understand semantic relationships between content to improve the relevancy and accuracy of information retrieval.

Natural Language Interface: Chatbots serve as a critical interface in AI knowledge bases, allowing users to ask questions in their own words and get back context-sensitive answers. Through Natural Language Processing, chatbots can interpret the user’s intent, consider conversation history, and provide only the most relevant information.
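Taking conversation history into account can be as simple as keeping the last few question-and-answer pairs and prepending them to the next request. The sketch below assumes a fixed window of turns; `ConversationMemory` and its methods are hypothetical names used only for illustration.

```python
from collections import deque


class ConversationMemory:
    """Keep the last few turns of a chat so follow-up questions can be interpreted in context."""

    def __init__(self, max_turns: int = 3):
        # Each turn is one (user question, assistant answer) pair; the oldest turn drops off automatically.
        self.turns: deque[tuple[str, str]] = deque(maxlen=max_turns)

    def add_turn(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))

    def as_context(self) -> str:
        """Render the stored turns as plain text to prepend to the next prompt."""
        lines = []
        for question, answer in self.turns:
            lines.append(f"User: {question}")
            lines.append(f"Assistant: {answer}")
        return "\n".join(lines)
```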

Integrations: Chatbots can integrate through APIs with other services or tools to meet users where they are, whether it’s a CRM, the company’s website, or a collaboration platform like Slack and Microsoft Teams.
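As one concrete example of such an integration, a Slack bot built on the Slack Events API receives JSON payloads over HTTP. The sketch below handles two of them: the one-time `url_verification` challenge and an `app_mention` event, from which it strips the `<@USERID>` mention to recover the question. The `answer` callback is a hypothetical stand-in for the assistant, and the HTTP server and the actual reply call are left out.

```python
import re
from typing import Callable, Optional

MENTION = re.compile(r"<@[A-Z0-9]+>")  # matches user mentions such as <@U123ABC>


def handle_slack_event(payload: dict, answer: Callable[[str], str]) -> Optional[dict]:
    """Route a decoded Slack Events API payload.

    Returns a dict describing the response, or None when the event is ignored.
    `answer` is a hypothetical function that produces a reply for a question.
    """
    if payload.get("type") == "url_verification":
        # Slack verifies the endpoint by sending a challenge that must be echoed back.
        return {"challenge": payload["challenge"]}
    event = payload.get("event", {})
    if payload.get("type") == "event_callback" and event.get("type") == "app_mention":
        question = MENTION.sub("", event.get("text", "")).strip()
        # A real bot would post this reply to event["channel"] via chat.postMessage;
        # here we only return what would be sent.
        return {"channel": event.get("channel"), "text": answer(question)}
    return None
```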

How we built an AI employee assistant from our company’s knowledge base: key steps and takeaways

Recognizing many of the pain points mentioned so far, we embarked on a pilot project that uses the capabilities of generative AI to provide better employee support and improve knowledge management practices within our company. We started with a small-scale implementation designed to test the viability of the approach before moving on to the next steps.

The pilot project

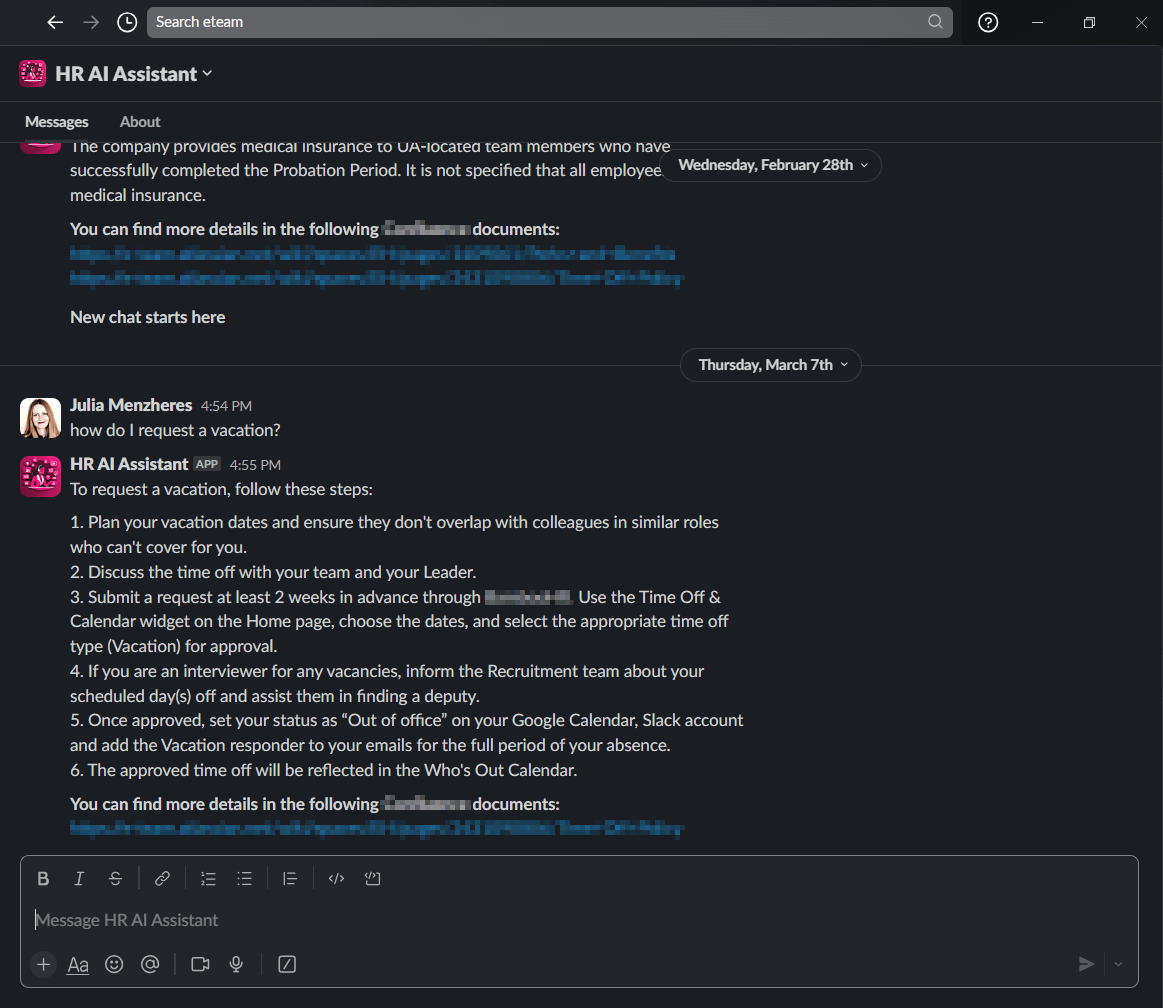

In this initial phase, we focused exclusively on our internal HR policies and procedures. The pilot combines LLMs with a Slack chatbot we built to answer HR-related questions based on our employee handbook and documentation. The goals of the pilot project are to:

Test feasibility and scalability. Explore the ability of AI tools to automate HR support before implementing them in other departments and domains of organizational knowledge.

Standardize information delivery. Ensure consistency in HR communications by delivering accurate answers based on company guidelines and policies.

Enhance employee experience. Support employees with the answers they need and check in with how they are feeling through employee mood statuses.

Optimize HR workload. Answer frequently asked questions through the AI employee assistant, allowing the HR team to focus on higher-value tasks.

For example, just by typing "How do I request a vacation?" you get an AI-generated list of the steps you need to take according to the internal time-off policy. The chatbot can also give you direct links to the specific documents the answer was generated from.
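Returning sources alongside an answer mostly comes down to remembering which documents were retrieved and appending them to the reply. A minimal sketch, assuming the generated answer and a list of source URLs are already available; `format_reply` is a hypothetical helper, not the pilot's actual code.

```python
def format_reply(answer: str, sources: list[str]) -> str:
    """Append the documents an answer was generated from, without duplicates, in retrieval order."""
    unique_sources = list(dict.fromkeys(sources))  # dedupe while preserving order
    if not unique_sources:
        return answer
    links = "\n".join(f"- {url}" for url in unique_sources)
    return f"{answer}\n\nSources:\n{links}"
```

Keeping the retrieval order means the most relevant document is listed first.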

Methodology and development steps

To build the AI employee assistant and allow it to generate answers straight from our company’s HR documentation, we went through several development steps, including extensive research into the appropriate tech stack.

If you want to dive deeper into the technical aspects, we have a separate upcoming article focused on comparing different LLM models and the architectural approach we took.

Here is a high-level overview of the steps:

-

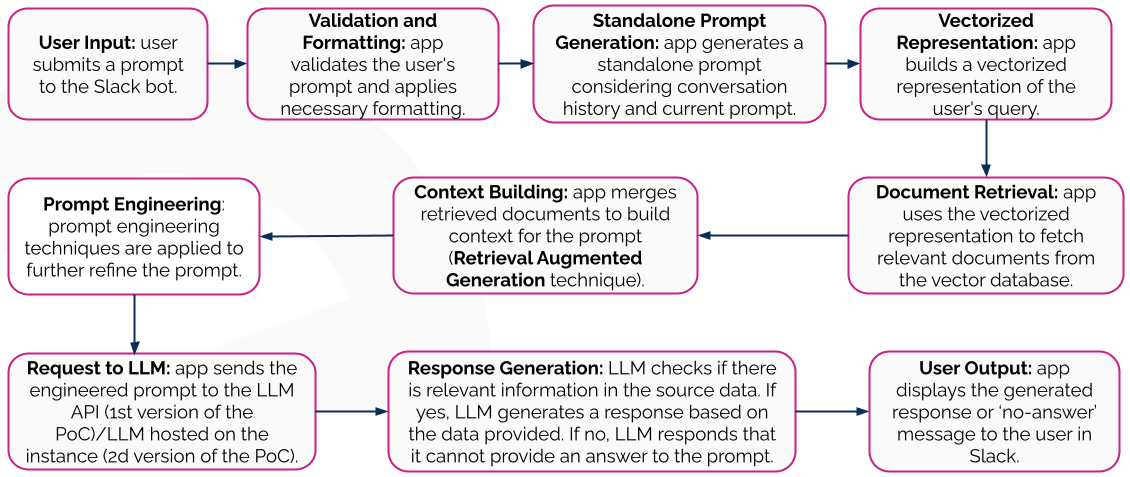

Research and compare LLM models. LLMs are the underlying technology that enables AI assistants to understand, process, and generate responses. To find the best fit for our project, we researched and compared some of the most popular paid and open-source LLM models.

-

Implement Retrieval Augmented Generation (RAG). RAG extends the already powerful capabilities of LLMs to incorporate an organization’s internal knowledge. Rather than generating responses based on the information it was trained on, RAG enables LLMs to use new knowledge without retraining.

-

Store data as vector embeddings. Techniques like RAG require a storage system where information is stored as vector embeddings. This transforms words and sentences into numerical representations that capture their meaning and interconnections.

-

Engineer AI prompts. Prompts instruct the model on how it should act when receiving a question, including what to do when it cannot find the information users are looking for.

-

Integrate the AI assistant with Slack. As we were already using it as our main collaboration platform, we integrated the AI chatbot with Slack to provide instant answers without switching tools.
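The embedding and retrieval steps can be illustrated with a toy, dependency-free example. Here a hashed bag-of-words vector stands in for a real embedding model, so it matches shared words rather than meaning, and a brute-force in-memory list stands in for a vector database. `embed`, `VectorStore`, and the 256-dimension default are assumptions made for this sketch, not the stack used in the pilot.

```python
import hashlib
import math
import re


def embed(text: str, dim: int = 256) -> list[float]:
    """Toy stand-in for an embedding model: hash each word into a fixed-size vector, then L2-normalize."""
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        index = int(hashlib.sha256(word.encode()).hexdigest(), 16) % dim
        vec[index] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    # embed() returns unit-length (or all-zero) vectors, so the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


class VectorStore:
    """In-memory list of (vector, text, source) rows, searched by brute force."""

    def __init__(self) -> None:
        self.rows: list[tuple[list[float], str, str]] = []

    def add(self, text: str, source: str) -> None:
        self.rows.append((embed(text), text, source))

    def search(self, query: str, k: int = 2) -> list[tuple[float, str, str]]:
        """Return the k most similar passages as (score, text, source), best first."""
        q = embed(query)
        scored = [(cosine(q, vec), text, source) for vec, text, source in self.rows]
        scored.sort(key=lambda row: row[0], reverse=True)
        return scored[:k]
```

In a production RAG setup, `embed` would call an embedding model and `VectorStore` would be replaced by a vector database with approximate nearest-neighbor search; the ranking idea (score by cosine similarity, keep the top k) stays the same.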

How does the AI employee assistant work?

The diagram below shows the application flow. It starts with the user asking the Slack AI chatbot a question and continues with the bot communicating with the LLM to build context from the retrieved documents.
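The flow can be summarized in a short Python sketch: retrieve relevant passages, wrap them in a prompt that also tells the model what to do when the answer is missing, then call the LLM and return the answer together with its sources. `retrieve` and `call_llm` are hypothetical callables you would back with a vector store and an LLM API, and the prompt wording is an assumption, not the prompt used in the pilot.

```python
from typing import Callable

# Hypothetical prompt wording, including a fallback instruction for missing information.
SYSTEM_PROMPT = (
    "You are an HR assistant. Answer only from the context below. "
    "If the context does not contain the answer, say you don't know "
    "and suggest contacting the HR team."
)


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Assemble a RAG prompt: instructions, numbered (source, text) passages, then the question."""
    context = "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(passages, start=1)
    )
    return f"{SYSTEM_PROMPT}\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"


def answer_question(
    question: str,
    retrieve: Callable[[str], list[tuple[str, str]]],
    call_llm: Callable[[str], str],
) -> tuple[str, list[str]]:
    """Question -> retrieved (source, text) passages -> prompt -> LLM answer, plus the sources used."""
    passages = retrieve(question)
    if not passages:
        # Nothing relevant was found, so skip the model call instead of letting it guess.
        return ("I couldn't find this in the HR documentation. Please contact the HR team.", [])
    answer = call_llm(build_prompt(question, passages))
    return answer, [source for source, _ in passages]
```

Returning early when nothing is retrieved is one way to implement the fallback; another is to send the empty context anyway and rely on the prompt's "say you don't know" instruction.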

Main benefits of using AI in knowledge management

The pilot project resulted not only in a solution capable of addressing a wide range of HR-related queries but also in a practical, grounded view of how AI is changing knowledge management.

While knowledge management is still deeply rooted in documentation, the shift to hybrid and remote work revealed significant gaps in existing practices. With the help of AI, organizations are beginning to close these gaps. Here are some of the benefits we found:

Increased efficiency. The employee AI assistant reduces HR workload by up to 50% by handling routine questions, so the team can focus on strategic activities instead. Employees themselves spend less time searching for information and browsing countless documents.

24/7 availability. With an always-on assistant, answers are available at your fingertips directly in chat, regardless of time zone or schedule, which provides better support to distributed teams.

Scalability. AI systems can easily accommodate growing volumes of data and user questions without a proportional increase in the complexity of managing such workloads.

Personalization. By tailoring the information presented to users based on their role, search intent, or past interactions, AI systems can improve employee experience.

Consistency. Because answers are generated straight from internal docs, all users receive the same up-to-date information regardless of their location or department.

Multi-language support. AI can be trained to understand and respond to queries in different languages, breaking down language barriers and preventing misunderstandings.

Effortless onboarding. Immediate access to relevant information significantly cuts down the time needed to train and onboard new team members, including transitioning between roles.

Workplace well-being. Implementing AI to proactively track employee mood can help identify early signs of burnout, leading to a healthier work environment and better retention rates.

Empower your business with generative AI today

Knowledge management is one of the most critical internal business processes and, at the same time, one of the most challenging. It requires a delicate balance between filtering out the noise and ensuring employees can quickly find the necessary information without being overwhelmed.

Add to this the fact that knowledge resources change constantly and quickly become outdated, and the challenge only grows.

At ETEAM we help organizations build powerful enterprise solutions and improve business processes through generative AI. Our AI and Machine Learning experts efficiently deploy the power of LLM models and Natural Language Processing to enrich the capabilities of software products or existing infrastructure, including your internal knowledge base.

Are you ready to automate business processes, enhance employee and customer experience, and drive tangible results? Let’s discuss how AI can make a difference for your business.